Memory: The Key to Coherent, Long‑Lived LLM Agents

Large language models (LLMs) have impressed us with fluent text generation, but they traditionally operate as stateless systems. Once an interaction is over, the model forgets everything unless it’s explicitly provided again. This lack of memory limits AI agents’ ability to maintain context, learn from experience, or adapt over long periods.

If we want truly coherent, long-lived AI agents – ones that can carry on meaningful conversations for weeks, remember preferences, or continually learn new facts – we must give them the ability to remember. Memory has emerged as a critical component for building more autonomous and adaptive LLM agents.

Why Memory Matters for LLM-Based Agents

Memory enables AI agents to retain and recall information beyond the fixed context window. Without memory, even the best LLMs struggle with long dialogues or multi-session consistency.

Simply increasing context length isn’t sufficient: conversation history keeps growing and eventually exceeds any fixed window, and models tend to lose key facts buried under irrelevant data. An intelligent agent must retain important details and abstract or discard irrelevant ones, much like humans do.

Memory also lets AI agents learn and adapt, recall user preferences, avoid repeating mistakes, and reason across time. It’s essential for creating AI systems that feel continuous and coherent.

State of the Art: Memory Architectures for LLM Agents

Mem0: Dynamic Memory for Conversational Agents

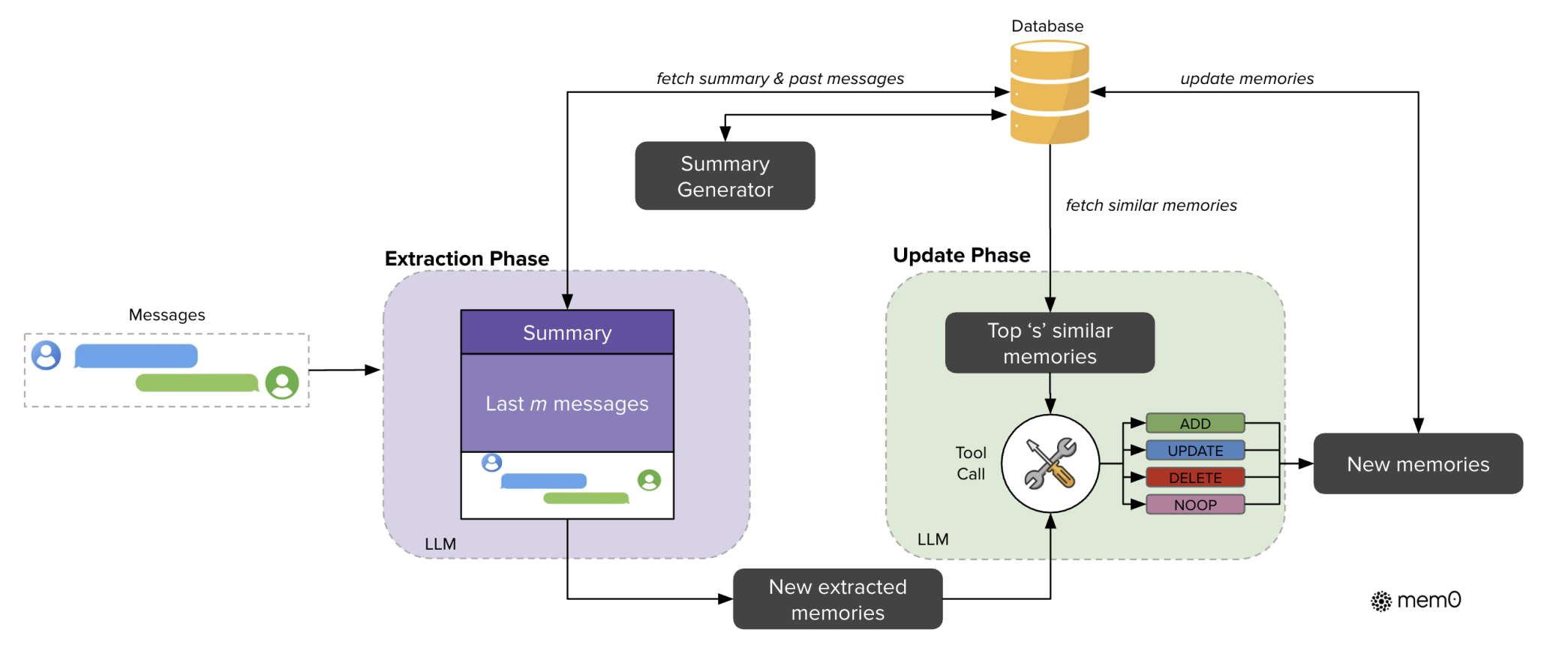

Mem0 adds a self-improving external memory to LLMs. It extracts key facts from ongoing conversations and stores them in a memory bank. Instead of passing the entire conversation to the model, Mem0 selects salient information and updates memory as needed.
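The extract-then-update loop described above can be sketched in a few lines. This is a minimal illustration, not Mem0’s API: the `MemoryBank` class, its method names, and the ADD/UPDATE/NOOP labels are hypothetical, fact extraction is stubbed out (facts arrive as ready-made subject/attribute/value triples rather than being pulled from dialogue by an LLM), and word overlap stands in for embedding similarity.

```python
from dataclasses import dataclass, field


@dataclass
class MemoryBank:
    """Toy external memory: one stored value per (subject, attribute) key."""
    facts: dict = field(default_factory=dict)

    def update(self, subject: str, attribute: str, value: str) -> str:
        """Add a new fact, overwrite a changed one, or skip a duplicate."""
        key = (subject, attribute)
        if key not in self.facts:
            self.facts[key] = value
            return "ADD"
        if self.facts[key] == value:
            return "NOOP"
        self.facts[key] = value
        return "UPDATE"

    def retrieve(self, query: str, k: int = 3) -> list:
        """Return up to k facts ranked by word overlap with the query."""
        query_words = set(query.lower().split())
        scored = []
        for (subject, attribute), value in self.facts.items():
            text = f"{subject} {attribute} {value}"
            overlap = len(query_words & set(text.lower().split()))
            if overlap:
                scored.append((overlap, text))
        scored.sort(key=lambda pair: pair[0], reverse=True)
        return [text for _, text in scored[:k]]


bank = MemoryBank()
bank.update("user", "diet", "vegetarian")
bank.update("user", "city", "Berlin")
bank.update("user", "city", "Lisbon")  # the newer value replaces the older one
prompt_context = bank.retrieve("which city does the user live in")
```

Only the entries in `prompt_context` would be placed in the prompt, which is where the token and latency savings of this style of memory come from.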

Mem0 showed strong results:

- 91% latency reduction vs. full history methods.

- 90% fewer tokens used.

- 26% higher accuracy on long-context query benchmarks.

A variant, Mem0g, builds a knowledge graph for structured reasoning over entities, relations, and time. It enables multi-hop and temporal queries at the cost of slightly higher complexity.

Figure adapted from: “Mem0: An Explicit Memory Module for Efficient Long-Term Memory in LLMs”

Graphiti: Dynamic Knowledge Graph Memory

Graphiti by Zep AI focuses on enterprise and real-time agent use cases. It maintains a live, temporally-aware knowledge graph from ongoing interactions and data sources. Features include:

- Timestamps and versioning for facts.

- Hybrid search: embeddings + keyword + graph traversal.

- Sub-second retrieval latencies.

- 18.5% higher accuracy and 90% lower response latency on the LongMemEval benchmark.

Graphiti is well suited for enterprise AI agents that must track evolving facts (e.g., customer tickets, system updates) and reason across sessions.
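To make “timestamps and versioning for facts” concrete, here is a minimal sketch of a temporally-aware fact store. It is not Graphiti’s API or data model: `TemporalFact`, `TemporalFactStore`, `assert_fact`, and `as_of` are hypothetical names, there is no graph database or hybrid search, and the sketch assumes facts are asserted in chronological order.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import List, Optional


@dataclass
class TemporalFact:
    subject: str
    relation: str
    obj: str
    valid_from: datetime
    valid_to: Optional[datetime] = None  # None means "still current"


class TemporalFactStore:
    def __init__(self) -> None:
        self.facts: List[TemporalFact] = []

    def assert_fact(self, subject: str, relation: str, obj: str, at: datetime) -> None:
        """Close any current fact for (subject, relation), then add the new one."""
        for fact in self.facts:
            same_edge = (fact.subject, fact.relation) == (subject, relation)
            if same_edge and fact.valid_to is None:
                fact.valid_to = at  # the old version is kept, not deleted
        self.facts.append(TemporalFact(subject, relation, obj, valid_from=at))

    def as_of(self, subject: str, relation: str, at: datetime) -> Optional[str]:
        """Return the object that was valid at time `at`, if any."""
        for fact in self.facts:
            same_edge = (fact.subject, fact.relation) == (subject, relation)
            started = fact.valid_from <= at
            not_ended = fact.valid_to is None or at < fact.valid_to
            if same_edge and started and not_ended:
                return fact.obj
        return None


store = TemporalFactStore()
store.assert_fact("ticket-42", "status", "open", datetime(2025, 1, 5))
store.assert_fact("ticket-42", "status", "resolved", datetime(2025, 1, 9))
status_then = store.as_of("ticket-42", "status", datetime(2025, 1, 7))
status_now = store.as_of("ticket-42", "status", datetime(2025, 2, 1))
```

Because superseded facts are closed rather than overwritten, the agent can answer both “what is the status now?” and “what was the status last week?”.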

M+: Latent Long-Term Memory Inside Models

M+ extends the MemoryLLM framework. It compresses information into latent vectors and co-trains a retriever to query them during generation.

Key achievements:

- Scales from 20k to 160k+ token memory.

- Matches or exceeds long-context baseline models.

- Keeps GPU cost similar to that of standard LLMs.

Tradeoffs: memory is not human-readable (unlike Mem0/Graphiti) and requires custom retraining.

Cognitive Memory: Inspired by Human Systems

The Cognitive Memory proposal for LLMs models three layers of memory:

- Sensory memory: raw token input.

- Short-term memory: recent conversation context.

- Long-term memory: external databases, knowledge graphs, or fine-tuned updates.

This layered design provides robustness:

- Short-term memory prevents overflow.

- Long-term memory enables persistent personalization.

- Combined systems offer better generalization and context tracking.

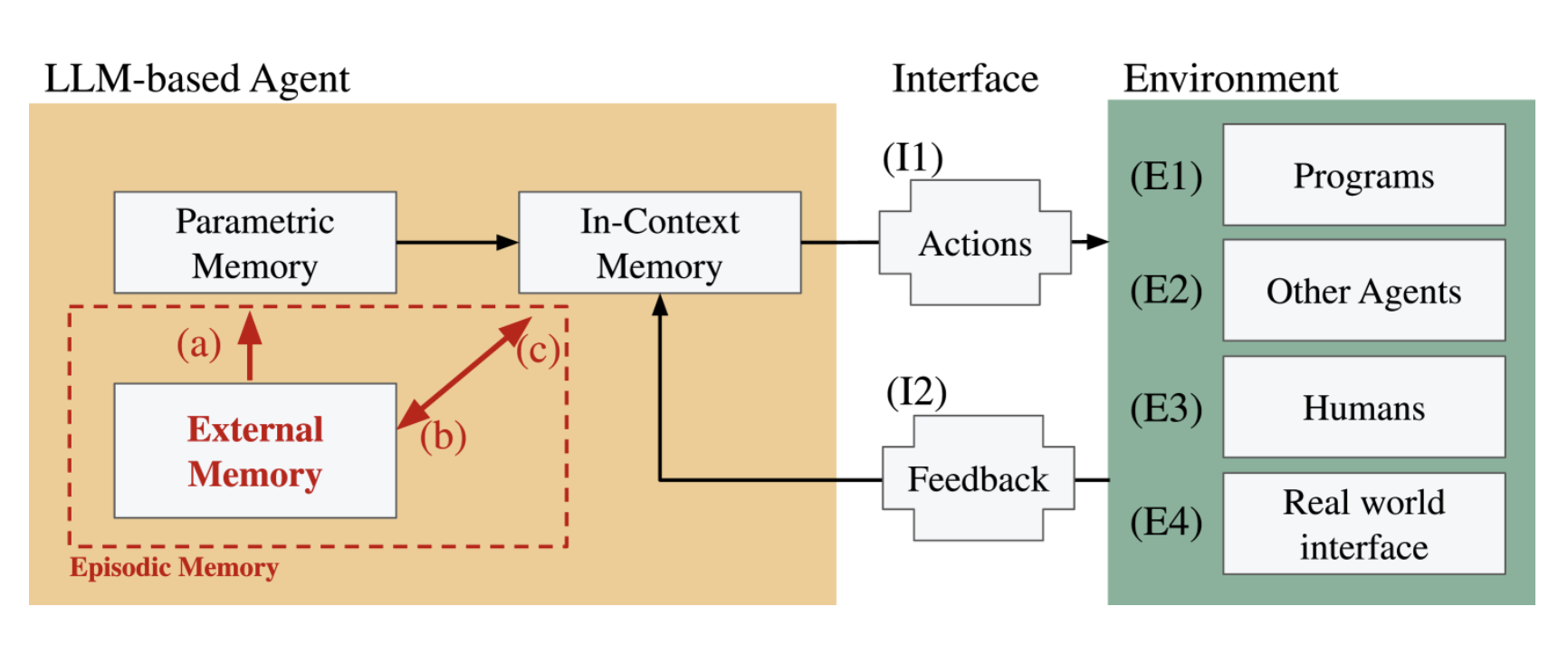

Episodic Memory: Structuring Experiences

The authors of “Episodic Memory is the Missing Piece for Long-Term LLM Agents” argue that episodic memory is the missing ingredient:

- Agents segment interactions into episodes (specific tasks, sessions, or experiences).

- Each episode stores a rich contextual snapshot: what happened, when, where, and with what outcome.

- Agents can recall past events explicitly and reason across time.

- Episodes support single-shot learning: learning from rare or one-time events.
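One way to picture an episode is as a small record with the what/when/where/outcome fields listed above. The sketch below is an assumption for illustration only: the `Episode` and `EpisodicMemory` names, the `tags` field, and tag-based recall are hypothetical and not taken from the paper.

```python
from dataclasses import dataclass, field
from datetime import datetime
from typing import List


@dataclass
class Episode:
    what: str
    when: datetime
    where: str
    outcome: str
    tags: List[str] = field(default_factory=list)  # hypothetical lookup keys


class EpisodicMemory:
    def __init__(self) -> None:
        self.episodes: List[Episode] = []

    def record(self, episode: Episode) -> None:
        self.episodes.append(episode)

    def recall(self, tag: str, since: datetime = datetime.min) -> List[Episode]:
        """Return episodes carrying `tag` at or after `since`, newest first."""
        hits = [e for e in self.episodes if tag in e.tags and e.when >= since]
        return sorted(hits, key=lambda e: e.when, reverse=True)


memory = EpisodicMemory()
memory.record(Episode("deploy failed on a missing env var", datetime(2025, 3, 1),
                      "staging", "failure", ["deploy"]))
memory.record(Episode("deploy succeeded after adding the env var", datetime(2025, 3, 2),
                      "staging", "success", ["deploy"]))
latest = memory.recall("deploy")[0]
```

A single recorded failure is enough for the agent to look it up before the next deploy, which is the single-shot learning idea in miniature.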

Figure adapted from: “Episodic Memory is the Missing Piece for Long-Term LLM Agents”

LongMemEval: Benchmarking Memory Systems

LongMemEval is a benchmark to measure long-term memory across five skills:

- Information extraction.

- Multi-session reasoning.

- Temporal reasoning.

- Knowledge updates.

- Abstention (saying “I don’t know” when appropriate).

Most models dropped ~30% accuracy in long-term tests without specialized memory. Zep’s Graphiti and Mem0 showed strong results by combining external memory with structured storage.

Takeaways for AI Researchers and Developers

| System | Memory Type | Strengths | Trade-offs |
|---|---|---|---|
| Mem0 | External vector memory | High speed, low cost, conversational personalization | Needs good fact extraction |
| Mem0g | Knowledge graph | Structured, multi-hop reasoning | Modestly higher latency |
| Graphiti | Temporal knowledge graph | Dynamic enterprise data, version tracking | Graph DB operational overhead |
| M+ | Latent in-model memory | Huge context length extension, seamless recall | Not human readable, custom retraining |
| Cognitive Memory | Layered design | Robustness across time-scales | System integration complexity |
| Episodic Memory | Structured experience logs | Lifelong learning, explicit recall | Adds storage and retrieval steps |

Practical Tips

1. Combine memory layers. No single memory mechanism fits all needs. Design your agent with layered memory:

- Short-term memory (context window, rolling dialogue buffer) for immediate coherence.

- Vector memory (e.g., embedding-based retrieval) for medium-term semantic recall.

- Structured memory (e.g., knowledge graph via Graphiti or Mem0g) for long-term, relational knowledge and temporal reasoning.

This “hybrid memory stack” allows the agent to balance fast access, scalability, and reasoning capabilities.
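The first two layers of such a stack can be sketched as follows. This is a simplified illustration under stated assumptions: `LayeredMemory` and its methods are hypothetical names, word overlap stands in for embedding similarity, and the structured (graph) layer is left out to keep the example short.

```python
from collections import deque
from typing import Deque, List


class LayeredMemory:
    def __init__(self, short_term_size: int = 4) -> None:
        self.short_term: Deque[str] = deque()  # rolling dialogue buffer
        self.short_term_size = short_term_size
        self.long_term: List[str] = []  # stand-in for a vector store

    def add_turn(self, turn: str) -> None:
        """Append a turn; the oldest turns spill into long-term storage."""
        self.short_term.append(turn)
        while len(self.short_term) > self.short_term_size:
            self.long_term.append(self.short_term.popleft())

    def build_context(self, query: str, k: int = 2) -> List[str]:
        """Recalled long-term turns first, then the recent buffer in order."""
        query_words = set(query.lower().split())

        def overlap(turn: str) -> int:
            return len(query_words & set(turn.lower().split()))

        ranked = sorted(self.long_term, key=overlap, reverse=True)
        recalled = [turn for turn in ranked[:k] if overlap(turn) > 0]
        return recalled + list(self.short_term)


memory = LayeredMemory(short_term_size=2)
for turn in ["my favorite editor is vim", "what is the weather",
             "tell me a joke", "another joke please"]:
    memory.add_turn(turn)
context = memory.build_context("which editor do I prefer")
```

The old turn about the editor has left the buffer, but it is recalled from the long-term layer because it matches the query, while the unrelated weather turn stays out of the prompt.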

2. Periodically summarize or consolidate knowledge. Agents operating over long periods will accumulate large memory footprints. Introduce reflection or consolidation steps:

- Summarize long dialogues or complex sessions into concise memory entries (as Mem0 does).

- Merge redundant information and prune stale or outdated facts (temporal tracking via Graphiti helps).

This minimizes memory bloat and prevents information drift.
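A consolidation pass that merges duplicates and prunes stale entries might look like the sketch below. The `MemoryEntry` type, the `consolidate` function, and the age-based pruning rule are assumptions chosen for illustration; a real system would likely merge on semantic similarity rather than normalized text, and would summarize with an LLM.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Dict, List


@dataclass
class MemoryEntry:
    text: str
    last_seen: datetime


def consolidate(entries: List[MemoryEntry], now: datetime,
                max_age: timedelta) -> List[MemoryEntry]:
    """Merge entries with identical normalized text, then drop stale ones."""
    merged: Dict[str, MemoryEntry] = {}
    for entry in entries:
        # Normalize case and whitespace so near-identical copies collide.
        key = " ".join(entry.text.lower().split())
        kept = merged.get(key)
        if kept is None or entry.last_seen > kept.last_seen:
            merged[key] = entry  # keep the most recently seen copy
    return [e for e in merged.values() if now - e.last_seen <= max_age]


now = datetime(2025, 6, 1)
entries = [
    MemoryEntry("User prefers dark mode", datetime(2025, 5, 1)),
    MemoryEntry("user prefers  dark mode", datetime(2025, 5, 20)),
    MemoryEntry("User is travelling this week", datetime(2024, 11, 3)),
]
kept = consolidate(entries, now, max_age=timedelta(days=90))
```

Running a pass like this on a schedule, or at the end of each session, keeps the store small enough that retrieval stays fast and old facts do not crowd out current ones.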

3. Use benchmarks to guide development. Evaluate your memory system using benchmarks like LongMemEval, which tests five key areas:

- Information extraction

- Multi-session reasoning

- Temporal reasoning

- Knowledge updates

- Abstention (knowing when the agent should say “I don’t know”)

Regular benchmarking helps catch edge cases, validate improvements, and systematically reduce forgetting.
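Tracking accuracy per skill, rather than as one aggregate number, makes regressions easier to spot. The following is a toy harness, not the LongMemEval tooling: `score_by_skill`, the case format, and the substring-match scoring rule are assumptions, and the agent is a stub.

```python
from collections import defaultdict
from typing import Callable, Dict, List, Optional, Tuple

# (skill, question, expected answer); expected=None means the agent should abstain.
Case = Tuple[str, str, Optional[str]]
ABSTAIN = "I don't know"


def score_by_skill(agent: Callable[[str], str], cases: List[Case]) -> Dict[str, float]:
    """Return accuracy per skill using a simple substring match."""
    correct: Dict[str, int] = defaultdict(int)
    total: Dict[str, int] = defaultdict(int)
    for skill, question, expected in cases:
        answer = agent(question)
        total[skill] += 1
        if expected is None:
            correct[skill] += int(answer.strip() == ABSTAIN)
        else:
            correct[skill] += int(expected.lower() in answer.lower())
    return {skill: correct[skill] / total[skill] for skill in total}


def stub_agent(question: str) -> str:
    """Hypothetical agent that knows one fact and abstains otherwise."""
    known = {"Where does the user live now?": "Lisbon"}
    return known.get(question, ABSTAIN)


cases = [
    ("knowledge updates", "Where does the user live now?", "Lisbon"),
    ("abstention", "What is the user's shoe size?", None),
    ("temporal reasoning", "When did the user move?", "2024"),
]
scores = score_by_skill(stub_agent, cases)
```

Here the stub scores well on knowledge updates and abstention but fails temporal reasoning, which is the kind of per-skill gap an aggregate score would hide.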

4. Build in reflection mechanisms. Agents should be designed to “pause and reflect” periodically:

- After each session, let the agent summarize what happened and store it as an episode (episodic memory design).

- Reflection prevents overfilling the context window and creates structured memories tied to specific tasks or conversations.

- This strategy has shown strong performance improvements in research and mirrors how humans consolidate memories after events.

Together, these practices form a foundation for creating memory-augmented AI agents that can operate reliably and continuously across diverse tasks and time scales.

Conclusion

Memory is no longer optional for next-generation AI agents: it’s the key to autonomy. From Mem0 and Graphiti to M+, cognitive frameworks, and episodic memory, the field has moved beyond chatbots toward agents that persist, adapt, and learn across time.

As AI scientists and developers, we now have the tools to start building agents that don’t just react — they remember.

The future of AI is not stateless. It’s memory-augmented AI.

References

- Mem0: An Explicit Memory Module for Efficient Long-Term Memory in LLMs

- Mem.ai Research

- Graphiti: Knowledge Graph Memory Layer for LLM Agents

- Graphiti (Zep AI) on GitHub

- M+: Latent Long-Term Memory for Language Models

- Episodic Memory is the Missing Piece for Long-Term LLM Agents

- Exploring Memory-Augmented Language Models: Special Token and Attention-based Approaches

- LongMemEval: Evaluating Long-Term Memory Abilities of LLM Agents